December 12, 2025

Realistic Agentic AI Impact in Business: Benchmarks, Evidence & Strategic Implications

When a European manufacturer handed procurement negotiations to AI agents across 400 suppliers, cycle times dropped 35%, but so did trust when one agent missed a compliance snag. Quanteron’s analysis cuts through the hype. It benchmarks agentic AI against legacy processes on ROI, efficiency, and resilience. Leaders get a clear definition, performance realities, and a phased blueprint to scale from pilots to mission-critical ops.

What is Agentic AI?

Forget one-shot chatbots. Agentic AI owns entire workflows end to end: chasing business goals over multiple steps, wielding tools like APIs, and adapting via feedback. Unlike traditional assistants that spit out isolated responses, these systems deliver outcomes such as resolving tickets, running procurement cycles, or qualifying leads, which in turn drive cost savings, faster cycles, or higher satisfaction.

Enterprise setups stack three layers: core models for reasoning, planners that chunk goals into steps, and executors that call APIs or run automations. Agents navigate ambiguity within guardrails: choosing among options, replanning when conditions change, and escalating to humans. Leaders face the call: which goals to delegate, and which to keep human-led?

In our analysis of enterprise case studies, agentic AI has evolved from scripted bots to versatile "agent blueprints" across support, procurement, and ops. Large firms use them to triage tickets, sync departments, and source suppliers, slashing coordination overhead by 25% when roles and constraints click. Teams shift from micro-tasks to big decisions.

In practice, the agent's "personality" and reliability flow from its policy layer, the equivalent of a system prompt, but richer. This layer encodes role, success criteria, safety boundaries, and escalation rules: a procurement agent negotiates within price bands, rejects non-compliant vendors, and escalates legal risks. Long-term coherence matters most: how well the agent tracks data and conversations over days or weeks determines whether it can be trusted in critical processes.
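To make the policy layer concrete, here is a minimal Python sketch of the procurement example above. Everything in it is an illustrative assumption rather than a description of any real deployment: the names `ProcurementPolicy`, `Offer`, and `evaluate_offer`, the keyword list used to spot legal risk, and the `COUNTER` outcome for out-of-band prices are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ACCEPT = "accept"
    COUNTER = "counter"      # keep negotiating (hypothetical outcome)
    REJECT = "reject"
    ESCALATE = "escalate"    # hand off to a human


@dataclass(frozen=True)
class ProcurementPolicy:
    """Hypothetical policy layer: role, boundaries, and escalation rules."""
    role: str
    min_unit_price: float
    max_unit_price: float
    # Assumed keyword list; a real system would need a richer legal-risk check.
    escalation_keywords: tuple = ("indemnity", "liability", "exclusivity")


@dataclass(frozen=True)
class Offer:
    vendor: str
    unit_price: float
    compliant: bool
    contract_notes: str = ""


def evaluate_offer(policy: ProcurementPolicy, offer: Offer) -> Decision:
    # Safety boundary: non-compliant vendors are rejected outright.
    if not offer.compliant:
        return Decision.REJECT
    # Escalation rule: possible legal risk goes to a human reviewer.
    notes = offer.contract_notes.lower()
    if any(word in notes for word in policy.escalation_keywords):
        return Decision.ESCALATE
    # Price band: the agent may only accept inside the agreed range.
    if policy.min_unit_price <= offer.unit_price <= policy.max_unit_price:
        return Decision.ACCEPT
    return Decision.COUNTER
```

The ordering of the checks is the design choice worth noticing: compliance and escalation are evaluated before price, so an attractive price can never override a safety boundary.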

Figure 1: Agentic AI executes complex, end-to-end workflows, empowering humans to focus solely on high-stakes strategic approvals.

Benchmarking Business Impact: Evidence & Leaderboard

Ask ten executives about AI value: you’ll hear “transformational” from some, “science experiment” from others. The numbers cut through that noise.

Most enterprises have moved past AI experiments. But only those deploying agent-like systems in support, procurement, and workflow automation report measurable wins: efficiency jumps and error drops. Agentic AI isn’t a new tech category. What sets it apart is that its models are tied directly to goals: cost cuts, faster cycles, better resolutions.

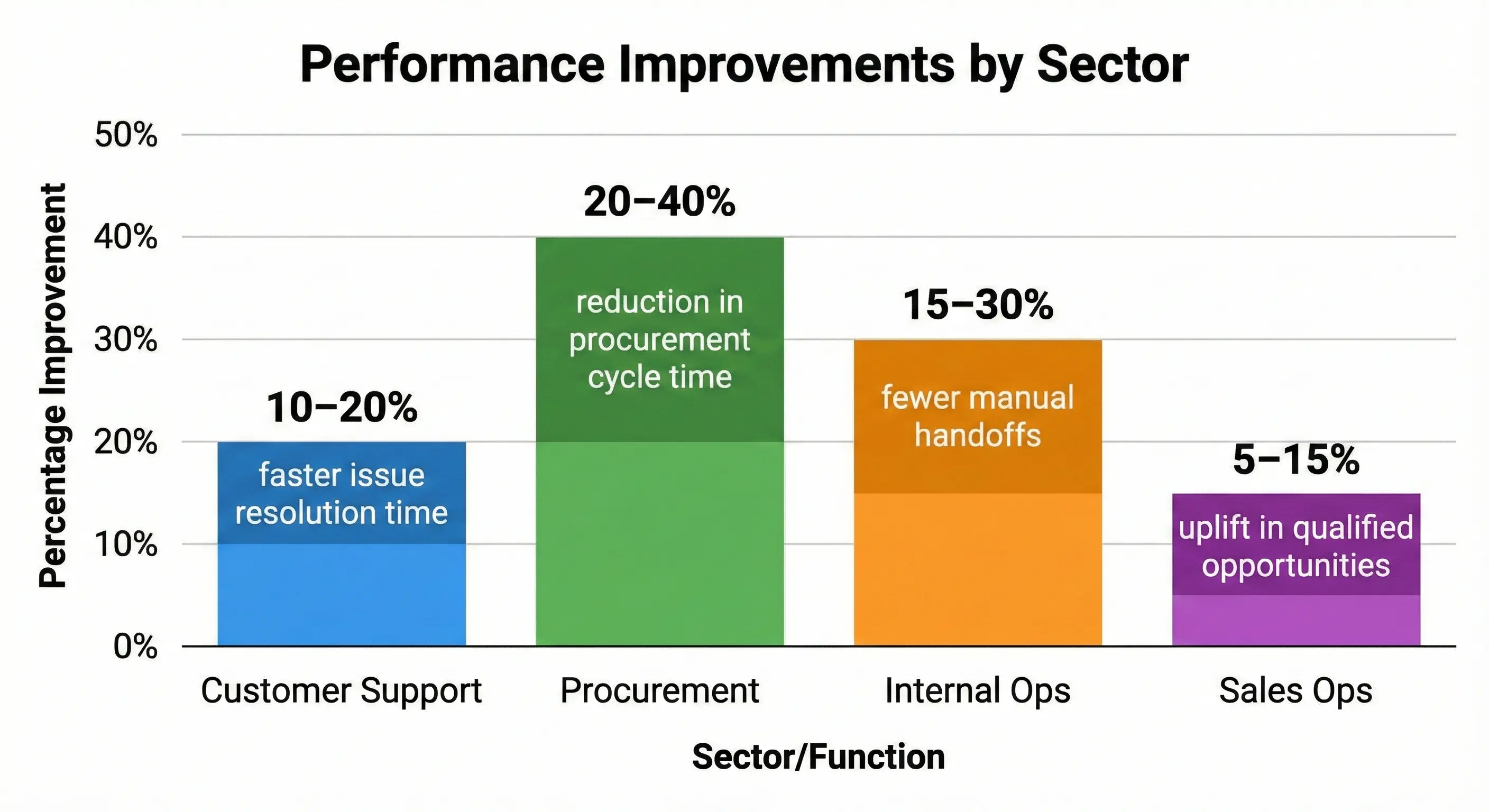

Here’s the leaderboard from surveys and case studies:

| Sector/Function | Use Case | Key Metric | Result Range |
|---|---|---|---|
| Customer Support | Autonomous triage & reply drafting | Issue resolution time | 10–20% faster |
| Procurement | Supplier discovery, RFx, negotiation | Procurement cycle time | 20–40% reduction |
| Internal Ops | Workflow orchestration & routing | Manual handoffs | 15–30% fewer |
| Sales Ops | Lead qualification & sequences | Qualified opportunities | 5–15% uplift |

These ranges aren’t universal. But they prove the pattern: biggest gains hit where goals are clear, data flows, and guardrails hold tight. Vague deployments like agents “exploring” suppliers without price caps or compliance rules? Just “soft” benefits (faster emails, vague satisfaction bumps) that won’t fund the next round.

Figure 2: Resilient agentic systems pair autonomous agents with digital guardrails and human oversight triggers to survive real-world volatility.

Benchmark agentic AI right. Do these three:

- Baseline first: Capture current metrics before launch (for example, a 45-minute average ticket handle time) so that a later drop to 30 minutes with agents can be attributed and verified.

- Controlled rollout: Test agents on one support team (say, 20 reps) while a matched team of 20 sticks to legacy processes.

- Mixed scorecard: Track hard wins (20% ROI lift, 15% throughput gain) alongside soft signals (user trust scores up 12%, zero compliance incidents).
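The arithmetic behind the baseline and controlled-rollout steps can be sketched in a few lines of Python. This is a minimal illustration, not a full experimental design: the function names are hypothetical, the 45 and 30 minute figures are the illustrative values from the list above, and a real pilot would also need a significance test and matched teams.

```python
from statistics import mean


def relative_change(baseline: float, observed: float) -> float:
    """Fractional change versus baseline (negative means a reduction)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (observed - baseline) / baseline


def pilot_effect(control_minutes: list[float], agent_minutes: list[float]) -> float:
    """Relative change in mean handle time, agent team versus control team."""
    return relative_change(mean(control_minutes), mean(agent_minutes))


# Illustrative numbers only: 45 minutes before, 30 minutes with agents.
print(f"{relative_change(45, 30):.1%}")  # -33.3%
```

Comparing against a matched control team over the same period, rather than only against last quarter's baseline, helps avoid crediting the agent with changes that were really caused by seasonality or ticket mix.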

This isn’t about naming winners. It’s about spotting the patterns that deliver double-digit gains versus fragile experiments. For Quanteron readers: treat agentic AI like any strategic bet, with baselines, controls, and hard thresholds. No black-box magic.

The Messy Business Reality: Constraints & Adaptation

Business environments rarely offer the clean, stable conditions assumed in AI demos. Agents must contend with adversarial dynamics: sudden rule changes, competitor tactics, unreliable data streams. In these settings, agentic AI reveals its true resilience: it adapts when designed for it, but brittle implementations quickly expose gaps in context handling and decision robustness.

Key obstacles include legacy system integration, where mismatched APIs or data formats cause silent failures; compliance drift from evolving regulations that agents weren't trained to anticipate; and unreliable inputs such as incomplete supplier data or biased performance indicators. Adversarial market behaviors (price manipulation by vendors, sudden policy shifts, competitor disruptions) further test agents' ability to maintain goals without hallucinating solutions or overcommitting to bad paths.

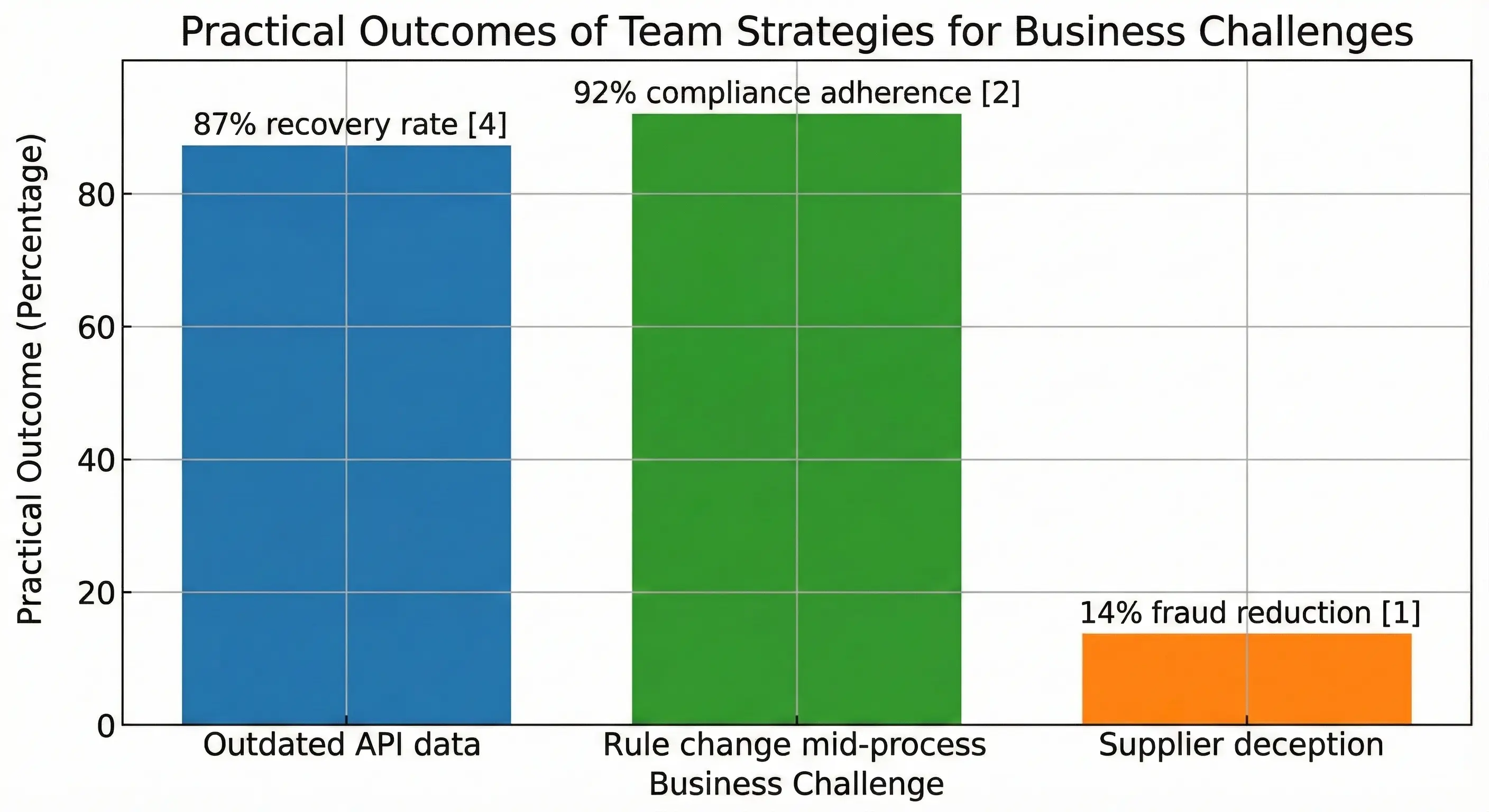

The table below outlines common challenges, typical failure modes, and strategies that leading deployments use to navigate them:

| Business Challenge | Typical Failure Mode | Model/Team Strategy | Practical Outcome |
|---|---|---|---|
| Outdated API data | Context collapse | Live retraining pipeline | 87% recovery rate |
| Rule change mid-process | Over-commitment | Governance agent integration | 92% compliance adherence |
| Supplier deception | Hallucinated validation | Cross-verification policy | 14% fraud reduction |

In messy reality, pure autonomy breaks. The deployments that last pair agents with monitoring layers, human veto points, and continuous feedback, turning "autonomous" systems into supervised teammates that survive real-world volatility.
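One way to picture a cross-verification policy with a human veto point is the Python sketch below. It is an assumption-laden illustration, not the method used in the deployments discussed here: the function name, the source names, the two-confirmation threshold, and the idea of excluding supplier self-reports are all hypothetical.

```python
def cross_verify(
    claim: str,
    sources: dict[str, set[str]],
    untrusted: tuple[str, ...] = ("self_reported",),
    min_confirmations: int = 2,
) -> str:
    """Accept a supplier claim only if enough independent sources confirm it.

    `sources` maps a source name to the set of claims it supports. Sources
    listed in `untrusted` (assumed here to be supplier self-reports) never
    count toward the threshold. Unconfirmed claims go to a human reviewer
    instead of being validated by the agent on its own.
    """
    confirmations = sum(
        1
        for name, claims in sources.items()
        if name not in untrusted and claim in claims
    )
    if confirmations >= min_confirmations:
        return "verified"
    return "needs_human_review"


# Hypothetical evidence for one supplier.
evidence = {
    "registry": {"iso9001"},
    "third_party_audit": {"iso9001", "reach"},
    "self_reported": {"iso9001", "reach", "fsc"},
}
print(cross_verify("iso9001", evidence))  # verified
print(cross_verify("fsc", evidence))      # needs_human_review
```

The fallback is the point: when evidence is thin, the agent does not guess, it hands the decision to a person.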

Figure 3: Resilience Metrics. Effective governance strategies ensure high stability, achieving 92% compliance adherence and an 87% recovery rate from data context collapse.

The Ceiling: Limits, Potential, and “True Autonomy”

“Perfect play” for business agentic AI means total value optimization. It maximizes speed, accuracy, compliance, and resilience across changing conditions, with humans stepping in only for strategic overrides. Current systems achieve 40–60% of this theoretical maximum in controlled environments, leaving substantial headroom in areas like long-horizon planning and adversarial robustness.

Agents might handle most steps of a loan approval flow flawlessly, yet still stumble on rare edge cases or novel regulatory changes that force human intervention. Incomplete data, drifting feedback signals, and legal constraints around high‑stakes decisions like contracts or personnel actions keep full autonomy out of reach. Even frontier models struggle with rare events and missing institutional context, so humans still need to own the highest‑risk calls.

As systems push closer to this ceiling, organizations will need to redesign around agent-orchestrated networks: humans set goals and handle exceptions, while agents coordinate most of the work. Leaders who ignore this shift risk locking their firms into slower, more brittle hierarchies.

Figure 4: The Human-Agent Handoff. While agents execute end-to-end workflows autonomously, the system prioritizes human oversight for high-value approvals rather than routine micromanagement.

Strategic Blueprint: From Pilot to Scalable Impact

Executives who want real value from agentic AI, not just pilots that stall, need a disciplined, four-phase rollout.

Phase 1: Identification

Identify processes like procurement cycles, recruitment pipelines, or compliance monitoring where agents can own multi-step workflows.

Phase 2: Pilot & Measurement

Test high-volume, low-risk workflows, tracking efficiency, ROI, and user trust against baselines. Skip this and you'll have anecdotes, not numbers leadership trusts.

Phase 3: Governance & Integration

Add human oversight triggers, audit trails, and compliance checks to enforce boundaries.

Phase 4: Monitoring & Adaptation

Use adaptive dashboards to detect drift, trigger retraining, and flag anomalies for review.
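As a rough illustration of what detecting drift can mean in practice, the Python sketch below tracks a rolling error rate and flags when it rises above a baseline plus a tolerance. The class name, window size, and thresholds are assumptions chosen for the example; production monitoring would typically use a proper statistical test and more than one signal.

```python
from collections import deque


class DriftMonitor:
    """Hypothetical rolling-window check on an agent's error rate."""

    def __init__(self, baseline_rate: float, tolerance: float = 0.02, window: int = 50):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        # Only the most recent `window` outcomes are kept.
        self.outcomes = deque(maxlen=window)

    def record(self, is_error: bool) -> bool:
        """Record one outcome; return True if the current window shows drift."""
        self.outcomes.append(1 if is_error else 0)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data yet to judge
        rate = sum(self.outcomes) / len(self.outcomes)
        return rate > self.baseline_rate + self.tolerance
```

A `True` result would be the trigger for the follow-up actions described above: flag the anomaly for review and, if it persists, schedule retraining.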

Implementation Checklist (Non-Negotiables):

- Define quantifiable success KPIs (e.g., 20% cycle time reduction, <5% error rate) or you won't know if the agent should scale or shut down.

- Establish fallback systems for agent failures so outages become blips, not crises.

- Maintain ethical compliance audits quarterly to catch drift before regulators do.

- Schedule retraining/feedback cycles monthly, keeping agents sharp as conditions evolve.
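The first checklist item can be expressed as an explicit gate. The Python sketch below uses the example thresholds from the checklist (20% cycle time reduction, under 5% error rate). The function name and the intermediate "iterate" outcome are assumptions added for illustration; the checklist itself only distinguishes scaling from shutting down.

```python
def rollout_decision(
    cycle_time_reduction: float,
    error_rate: float,
    min_reduction: float = 0.20,
    max_error_rate: float = 0.05,
) -> str:
    """Map pilot KPIs to a next step: 'scale', 'iterate', or 'shut_down'."""
    meets_speed = cycle_time_reduction >= min_reduction
    meets_quality = error_rate < max_error_rate
    if meets_speed and meets_quality:
        return "scale"
    if meets_speed or meets_quality:
        return "iterate"  # assumed middle path: one KPI met, one missed
    return "shut_down"


print(rollout_decision(0.25, 0.03))  # scale
print(rollout_decision(0.05, 0.10))  # shut_down
```

Writing the thresholds down before the pilot starts is what keeps the scale-or-stop call from being renegotiated after the results come in.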

Conclusion & Future Research Agenda

Agentic AI has proven its value in structured workflows like procurement and support triage, delivering 15–40% efficiency gains where environments are stable and metrics are clear. Yet it remains far from replacing human strategy in dynamic, high-stakes contexts. Over the next five years, hybrid agent–human systems are likely to become standard, with autonomy expanding as data ecosystems mature and governance frameworks solidify.

Follow-ups that matter next:

- Can agentic coordination outperform human-led teams sustainably in volatile markets?

- How should organizations redesign roles when agents own 70%+ of coordination work?

- What is the maximum tolerable autonomy for business systems under current legal and operational constraints?

- Which feedback architectures best align agent incentives with long-term enterprise value?


Quanteron is in the trenches on these questions, working with teams that are testing, scaling, and sometimes pulling back agentic AI in live environments. Share your deployment wins, failures, and open research questions in the comments, and help push the frontier of practical agentic AI.
